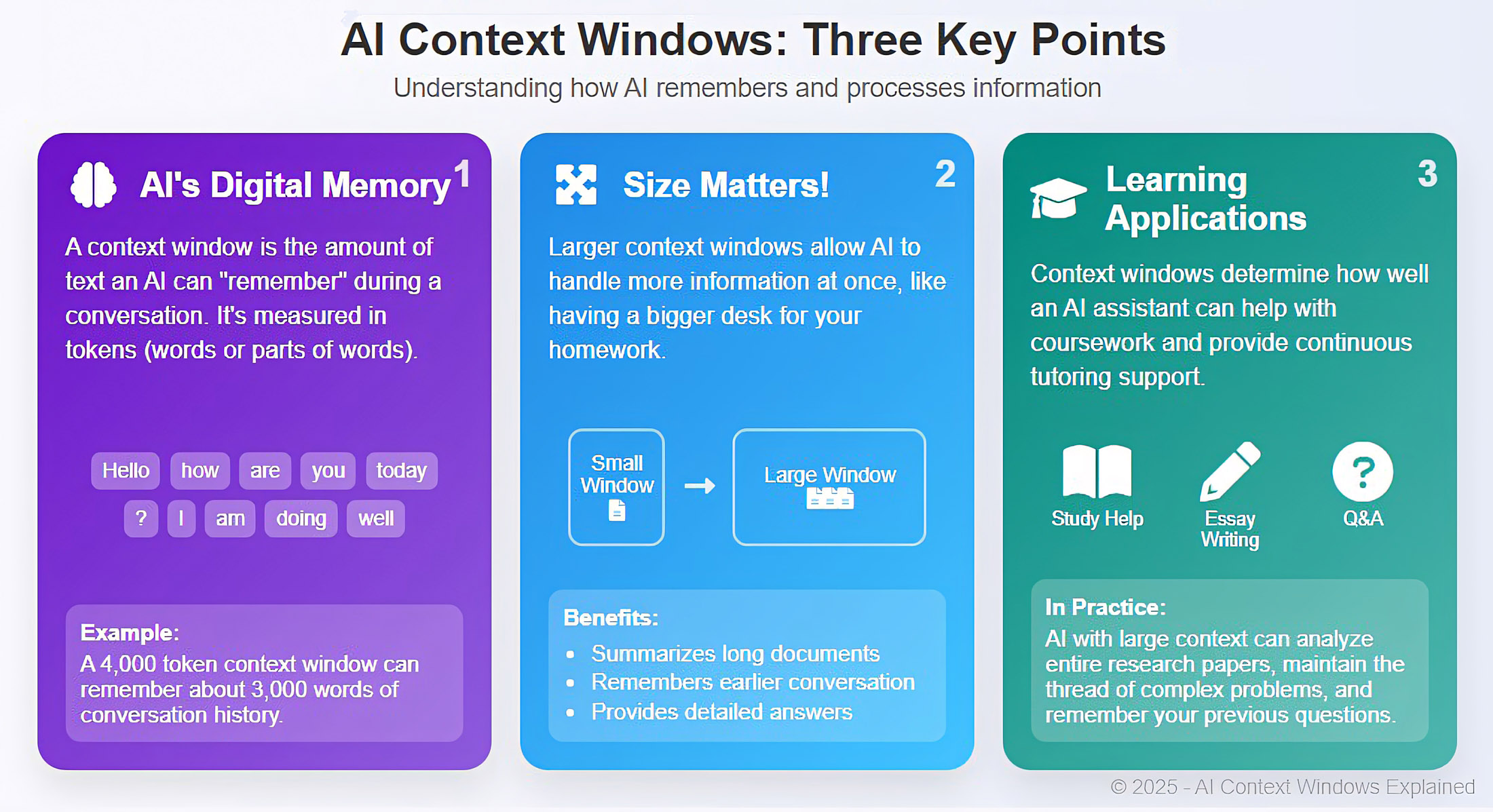

Context windows temporarily store the text of a chat while we interact with an AI model.

By storing chat messages in memory, the model can maintain a consistent understanding of the overall conversation.

Context windows are measured by the total number of tokens the model can process at any one time.
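To make the idea concrete, here is a minimal sketch of a chat history held in memory and measured against a fixed token budget. It is an illustration under stated assumptions, not how any particular model works: the `ContextWindow` class, its `max_tokens` parameter and the policy of dropping the oldest messages once the budget is exceeded are all hypothetical, and tokens are approximated at roughly four characters each instead of being counted by a real tokenizer.

```python
from collections import deque


def approx_tokens(text: str) -> int:
    """Rough estimate (assumption): about four characters of English text per token."""
    return max(1, round(len(text) / 4))


class ContextWindow:
    """Hypothetical in-memory chat history capped at a fixed token budget."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages = deque()  # oldest message on the left, newest on the right
        self.used_tokens = 0

    def add(self, message: str) -> None:
        # Store the new message and add its estimated token count to the total.
        self.messages.append(message)
        self.used_tokens += approx_tokens(message)
        # Assumed overflow policy: forget the oldest messages until the history fits.
        # A single message larger than the whole budget is kept on its own.
        while self.used_tokens > self.max_tokens and len(self.messages) > 1:
            dropped = self.messages.popleft()
            self.used_tokens -= approx_tokens(dropped)


window = ContextWindow(max_tokens=10)
window.add("a" * 20)  # about 5 tokens
window.add("b" * 20)  # about 5 tokens, total 10: still fits
window.add("c" * 8)   # about 2 tokens, total 12: the oldest message is dropped
```

Once the third message pushes the total past the budget, the first message falls out of the window and the model in this sketch would no longer "see" it.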

It's hard to be precise, but as a rule of thumb a token represents about four characters of English text.

So, for instance, 100 tokens would be roughly 75 words.
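The rule of thumb above can be written as a few helper functions. This is only a rough estimate that assumes about four characters, or three-quarters of a word, per token; real models split text with their own tokenizers, so actual counts vary. The function names are illustrative, not part of any library.

```python
def estimate_tokens(text: str) -> float:
    """Rule of thumb: one token is roughly four characters of English text."""
    return len(text) / 4


def tokens_to_words(num_tokens: float) -> float:
    """Rule of thumb: 100 tokens is roughly 75 words, i.e. 0.75 words per token."""
    return num_tokens * 0.75


def words_to_tokens(num_words: float) -> float:
    """Inverse of tokens_to_words: roughly 4/3 tokens per word."""
    return num_words / 0.75


print(estimate_tokens("x" * 400))  # 100.0
print(tokens_to_words(100))        # 75.0
print(words_to_tokens(75))         # 100.0
```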

A common context window for today's mainstream cloud-based models is around 128,000 tokens, or roughly 96,000 words.
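Applying the same rule of thumb to a 128,000-token window gives a sense of scale. The sketch below assumes the ratios given earlier (about four characters and 0.75 words per token); `window_capacity` is a hypothetical helper, and the real capacity depends on the model's tokenizer and the kind of text being processed.

```python
CHARS_PER_TOKEN = 4     # rule-of-thumb assumption for English text
WORDS_PER_TOKEN = 0.75  # 100 tokens is roughly 75 words


def window_capacity(max_tokens: int) -> dict:
    """Approximate how much English text fits in a context window of max_tokens."""
    return {
        "tokens": max_tokens,
        "words": round(max_tokens * WORDS_PER_TOKEN),
        "characters": max_tokens * CHARS_PER_TOKEN,
    }


print(window_capacity(128_000))
# {'tokens': 128000, 'words': 96000, 'characters': 512000}
```

In other words, a 128,000-token window holds something on the order of a full-length novel rather than a few pages.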

Clearly the latter would be extremely frustrating.

The size of a context window is also important for web search and recommendation requests.