
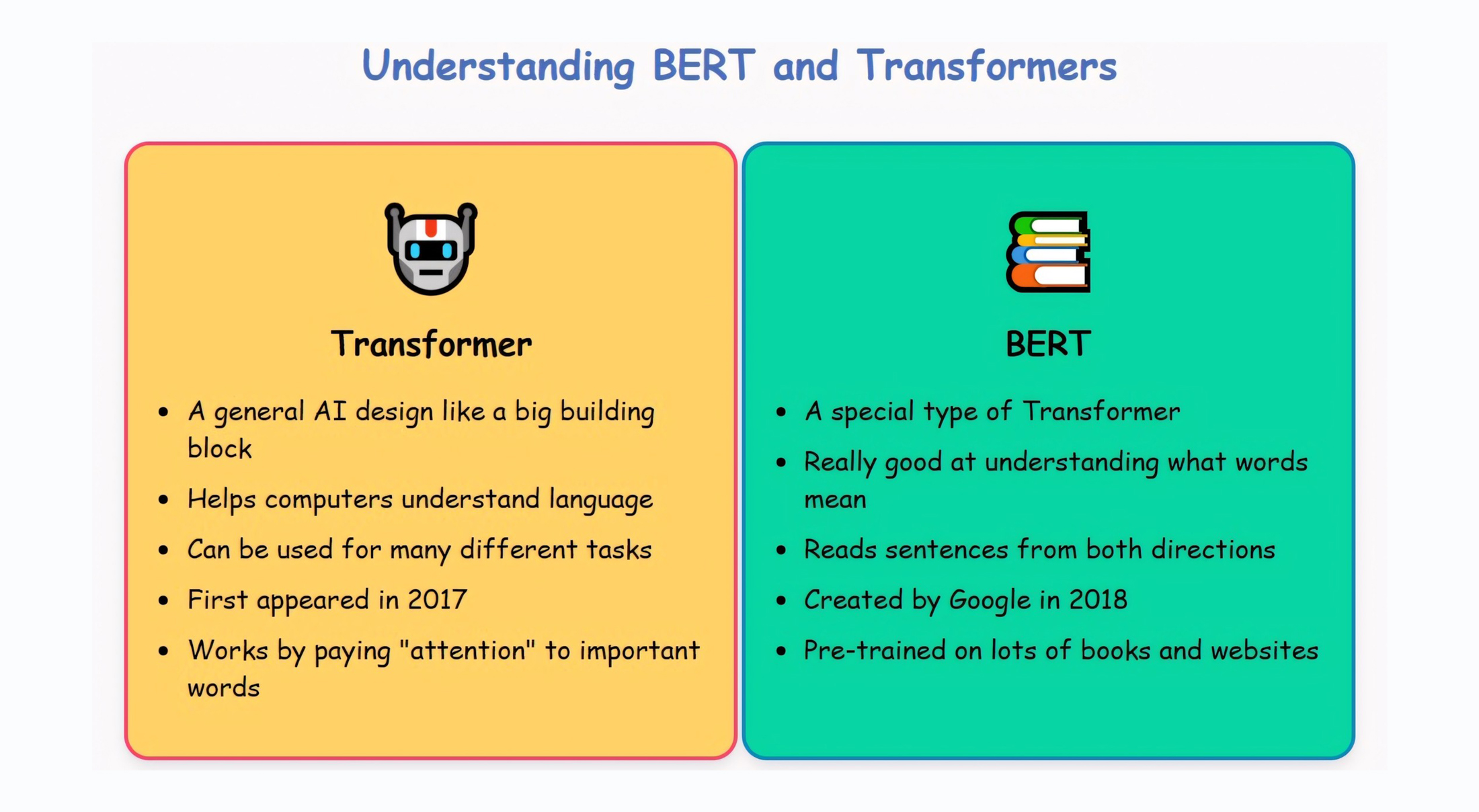

BERT stands for Bidirectional Encoder Representations from Transformers.

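The key difference between BERT and GPT comes down to two words: bidirectional and autoregressive. BERT is bidirectional, so when it builds a representation of a word it looks at the words on both sides of it. GPT is autoregressive: it generates text one token at a time, and each prediction can draw only on the tokens that came before it.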
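
The difference shows up concretely in the attention mask each model uses. Below is a minimal sketch in plain Python, assuming a toy five-word sentence. The helper names `attention_mask` and `visible_context` are hypothetical. Real models apply these masks inside every self-attention layer to numeric scores, not to lists of words.

```python
def attention_mask(n: int, causal: bool) -> list[list[int]]:
    """Return an n x n mask where mask[i][j] == 1 means position i may attend to position j.

    causal=False gives a BERT-style (bidirectional) mask: every position sees every other.
    causal=True gives a GPT-style (autoregressive) mask: position i sees only positions j <= i.
    """
    return [[1 if (not causal or j <= i) else 0 for j in range(n)] for i in range(n)]


def visible_context(tokens: list[str], position: int, causal: bool) -> list[str]:
    """List the tokens that `position` is allowed to attend to under the chosen mask."""
    mask = attention_mask(len(tokens), causal)
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]

# Bidirectional: "bank" can use "river" to work out which sense of "bank" is meant.
print(visible_context(tokens, 1, causal=False))  # ['the', 'bank', 'of', 'the', 'river']
# Autoregressive: "bank" sees only itself and the words before it.
print(visible_context(tokens, 1, causal=True))   # ['the', 'bank']
```

The only change between the two settings is whether the upper triangle of the mask is zeroed out, which is why the same Transformer building blocks can serve both designs.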

Reading context in both directions lets BERT generate more accurate, context-sensitive representations of words…
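
The two training objectives reflect the same split. This is a simplified sketch, assuming whitespace-split words instead of real subword tokens; `masked_lm_example` and `next_token_examples` are hypothetical helpers. Actual BERT pretraining picks about 15% of tokens at random and applies extra replacement rules; here the masked position is chosen by hand so the output is easy to follow.

```python
MASK = "[MASK]"


def masked_lm_example(tokens: list[str], mask_positions: set[int]) -> tuple[list[str], dict[int, str]]:
    """BERT-style masked language modeling: hide some tokens and keep the originals as targets.

    The model must recover each hidden token using the words on both sides of it.
    """
    inputs = [MASK if i in mask_positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(mask_positions)}
    return inputs, targets


def next_token_examples(tokens: list[str]) -> list[tuple[list[str], str]]:
    """GPT-style next-token prediction: pair every prefix with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


tokens = ["the", "bank", "of", "the", "river"]

inputs, targets = masked_lm_example(tokens, {1})
print(inputs)   # ['the', '[MASK]', 'of', 'the', 'river']
print(targets)  # {1: 'bank'}

# Each GPT-style example only ever shows the model what came before the target.
for prefix, nxt in next_token_examples(tokens):
    print(prefix, "->", nxt)
```

In the masked example the model can use "river" when guessing the hidden word; in every next-token example the target's right-hand context is simply absent.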

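In practice, BERT and GPT are often used together in user-facing applications; for instance, a BERT-style encoder can find relevant passages while a GPT-style model writes the reply.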

Various versions of BERT have been introduced over time to address specific needs and improve performance in different domains.

These variations allow developers to choose the most suitable model for their particular applications.