
The reason they need so much power is to cope with a wide range of general-purpose applications.

But there’s a cost to all this power, as you might expect.

How does it work?

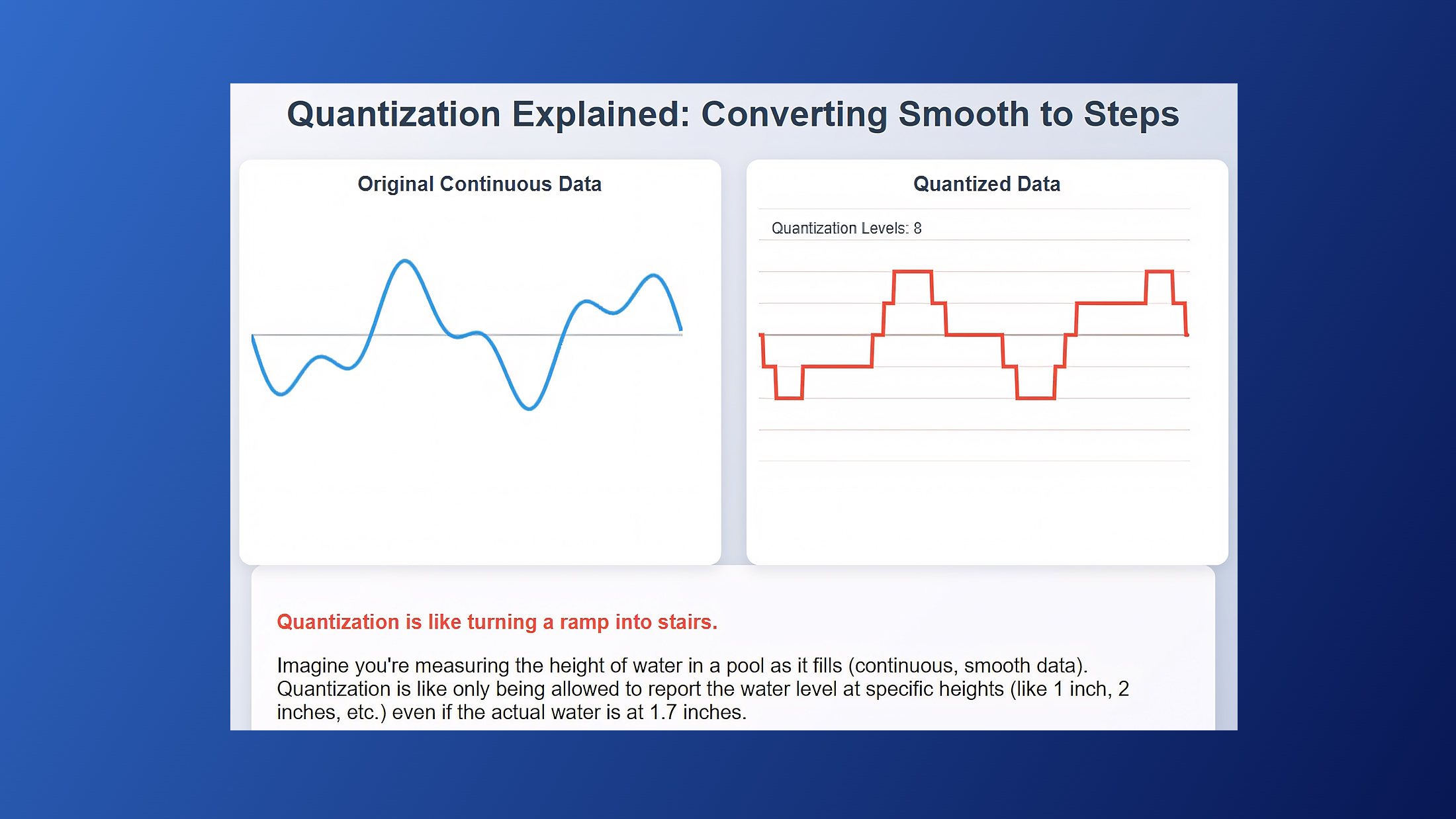

Quantization basically reduces the precision of the numbers used in a neural network.
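The article doesn't say which quantization scheme it has in mind, so here is one common, simple option as an illustration: symmetric per-tensor linear quantization. Each weight $w_i$ is divided by a single shared scale $s$ and rounded to a $b$-bit signed integer $q_i$. To use the weight again, you multiply the integer back by $s$, which gives the approximation $\hat{w}_i$:

$$
s = \frac{\max_i |w_i|}{2^{b-1} - 1}, \qquad
q_i = \operatorname{round}\!\left(\frac{w_i}{s}\right), \qquad
\hat{w}_i = s\,q_i, \qquad
|w_i - \hat{w}_i| \le \frac{s}{2}
$$

For $b = 8$, the integers fall in the range $[-127, 127]$. If the largest weight magnitude were 0.5, then $s = 0.5/127 \approx 0.0039$, and no reconstructed weight would be off by more than about 0.002.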

While this sounds like we’re deliberately making the model worse, it’s actually an excellent compromise: a small loss of accuracy in exchange for much lower memory and compute requirements.
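Here is a rough way to see that trade-off in action. This is a minimal sketch, not any particular library's method. It assumes symmetric per-tensor quantization and uses randomly generated "weights" in place of a real model. It shrinks the numbers to 8-bit and then 4-bit integers, restores them, and reports the worst-case error. Fewer bits means a coarser scale and therefore larger errors.

```python
import random

def quantize_symmetric(weights, bits=8):
    """Map floats to signed integers that share one scale (symmetric, per-tensor)."""
    qmax = 2 ** (bits - 1) - 1              # 127 for 8 bits, 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, clamping to guard against float drift.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats from the stored integers."""
    return [x * scale for x in q]

random.seed(0)
# Illustrative weights only: small Gaussian values, not taken from a real model.
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]

for bits in (8, 4):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit: scale={scale:.6f}, max error={max_err:.6f} (bound {scale / 2:.6f})")
```

Each restored weight stays within half a quantization step of the original. Real toolkits often go further, for example with per-channel scales or calibration data, to keep accuracy loss small at low bit widths.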

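Base models typically use 32-bit floating-point numbers (FP32), four bytes each, to represent their parameters (the weights and biases). Quantization stores those parameters in lower-precision formats such as 8-bit integers (INT8), which take a quarter of the space.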
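The savings are easy to estimate with back-of-the-envelope arithmetic. Below is a minimal sketch that counts only the storage for the weights themselves and ignores activations and runtime overhead. The 7-billion-parameter model is a hypothetical size chosen purely for illustration.

```python
# Bytes needed to store one parameter in each format.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1, "INT4": 0.5}

def weight_memory_gb(num_params, fmt):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9

num_params = 7_000_000_000  # hypothetical 7-billion-parameter model, for illustration only
for fmt in BYTES_PER_PARAM:
    print(f"{fmt}: {weight_memory_gb(num_params, fmt):.1f} GB")
```

For this hypothetical model, the footprint drops from 28.0 GB in FP32 to 7.0 GB in INT8 and 3.5 GB in INT4. That is the difference between needing a data-center GPU and fitting on a consumer graphics card or a laptop.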

Remember photo compression?

This has enormous implications for accessibility in regions of the world with limited connectivity or computing resources.

One of the major beneficiaries of this improvement is the open source community.