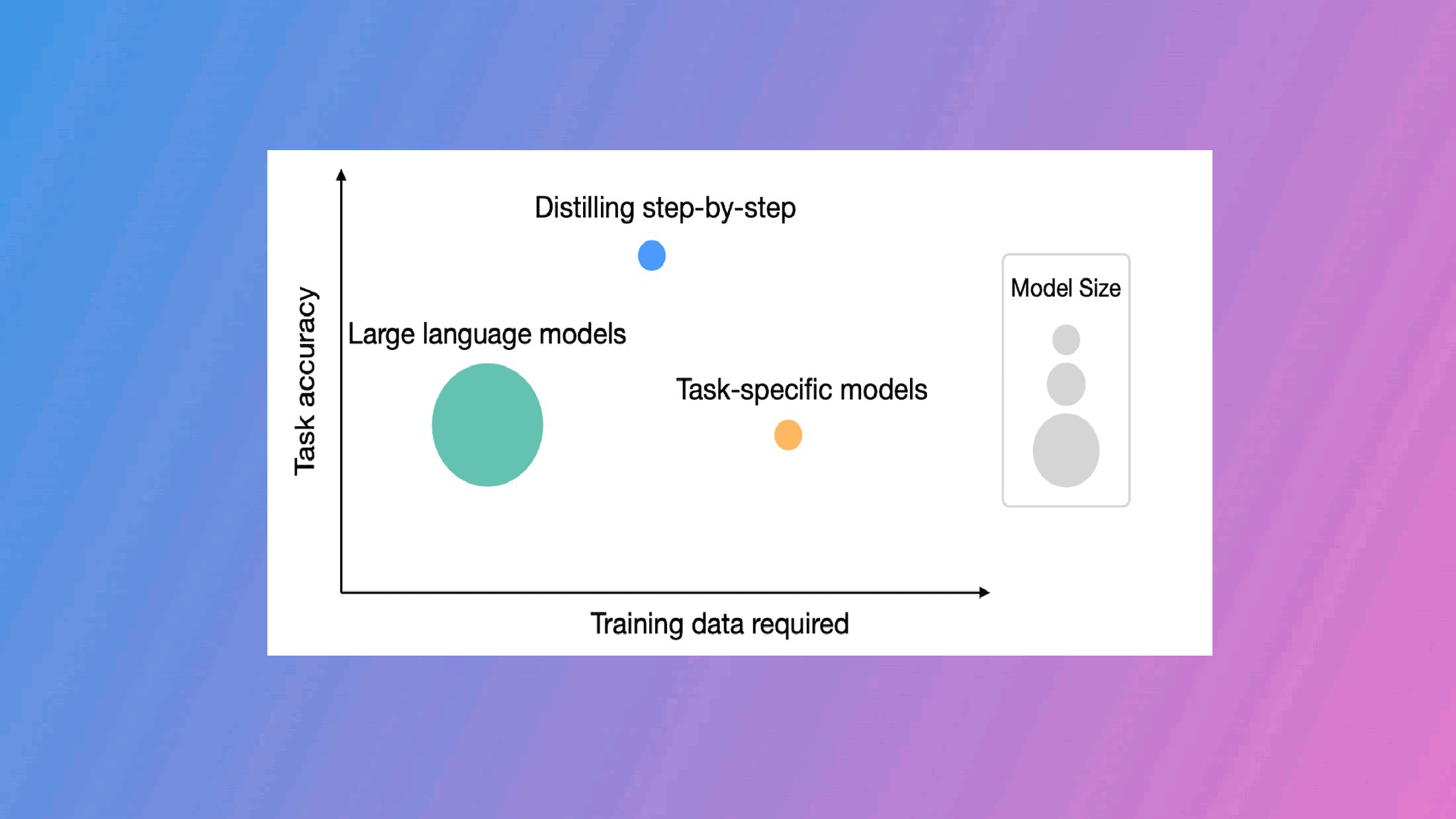

Distillation works by using the larger teacher model to generate outputs that the student model then learns to mimic.
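To make the idea concrete, here is a minimal sketch of how a student can be scored on how well it mimics a teacher. It assumes the common soft-target setup, in which both models' raw scores (logits) are softened with a temperature and compared using KL divergence. The function names, the temperature value and the example numbers are illustrative assumptions, not taken from any particular vendor's tooling.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and the student's softened distributions."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical logits for a three-class problem (illustrative values only).
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # prefers a different class

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(loss_close < loss_far)
```

During training, the student's weights would be adjusted to push this loss down, so a student that agrees with the teacher scores lower than one that does not.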

It is not just the open-source sector that uses distillation.

These companies also often provide distillation tools to their top clients to help them create smaller model versions.

Distillation vs Fine-Tuning

Note that distillation is completely different from fine-tuning.

Interestingly enough, both distilled and fine-tuned models can sometimes outperform their larger brethren on specific tasks or roles.

That is not necessarily the case with fine-tuned models.

There are three main methods of distillation: response-based, feature-based and relation-based techniques.

And each approach offers benefits and disadvantages in terms of the quality of the resulting student model.
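As a rough illustration of how the three approaches differ, the sketch below computes one toy loss for each. It assumes the usual reading of the terms: response-based methods compare final outputs, feature-based methods compare intermediate activations, and relation-based methods compare how examples relate to one another (here, their pairwise distances). All names and numbers are hypothetical, and the teacher and student are assumed to produce vectors of the same width, which real systems often handle with an extra projection layer.

```python
import math

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def batch_mse(teacher_batch, student_batch):
    """Average per-example MSE over a batch of vectors."""
    pairs = list(zip(teacher_batch, student_batch))
    return sum(mse(t, s) for t, s in pairs) / len(pairs)

def response_loss(teacher_outputs, student_outputs):
    """Response-based: match the models' final outputs."""
    return batch_mse(teacher_outputs, student_outputs)

def feature_loss(teacher_features, student_features):
    """Feature-based: match intermediate activations (same math, different inputs)."""
    return batch_mse(teacher_features, student_features)

def relation_loss(teacher_features, student_features):
    """Relation-based: match the pairwise distances between examples in a batch."""
    def distances(vectors):
        return [math.dist(u, v) for u in vectors for v in vectors]
    return mse(distances(teacher_features), distances(student_features))

# Hypothetical activations for a batch of three examples.
teacher_feats = [[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]]
# The student's activations are the teacher's shifted by one in every dimension.
student_feats = [[1.0, 1.0], [4.0, 5.0], [7.0, 9.0]]

print(feature_loss(teacher_feats, student_feats))
print(relation_loss(teacher_feats, student_feats))
```

In this example the student's activations differ from the teacher's, so the feature-based loss is non-zero, but the distances between examples are preserved, so the relation-based loss is effectively zero. That hints at the trade-off: the methods reward different kinds of agreement with the teacher.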

It also opens the door to more private and secure AI applications.

There are other advantages that come from distillation.

The smaller models run faster and use significantly less energy.

They also have a much smaller memory footprint and can be trained for specialized tasks.