
Imagine someone who has just woken from a coma. They might be brilliant about anything that happened before it but clueless about everything since.

That’s basically what an AI without search is like.

The most revealing tests are those that mimic real-world usage scenarios.

Calendar

I started with a test about the news and ongoing events.

In this first test, each chatbot established a style that it mostly maintained throughout.

ChatGPT was incredibly brief in its answer, just three sentences, each mentioning upcoming missions without much detail.

I can’t really fault any of the others, though ChatGPT’s terse style did fit the question.

They were all in agreement about the 1950 population, however.

What’s happening?

This is where things get tricky for AI assistants.

There was some real divergence on this one.

Perplexity and Claude stayed accurate and kept their respective styles: a numbered list from Perplexity and a more conversational discussion from Claude.

Still, Claude notably went for breadth over depth and sounded more like Perplexity.

Gemini broke sharply from its rivals and essentially declined to answer.

It was more like doing a regular Google search that way.

ChatGPT, meanwhile, came back with what I might have expected from Gemini.

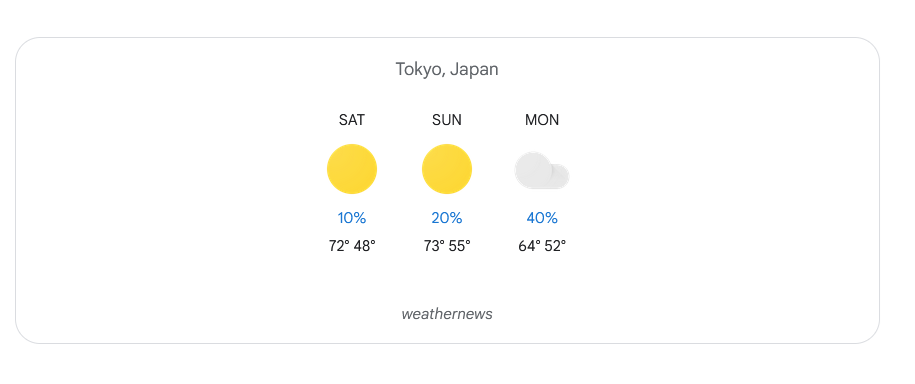

I asked the AI chatbots: “What’s the weather forecast for Tokyo for the next three days?”

The responses were almost the inverse of the Vancouver query.

With nothing added, Google Gemini won me over with its colorful information graphic.

This task requires a flexible search feature and the ability to make sense of diverse viewpoints.

I decided to see how it did with: “Summarize professional critics' reviews of the latest Paddington film.”

It’s the difference between a simple aggregation of opinions and a thoughtful synthesis that captures the critical consensus.

Still, its failure with the event schedule really put me off despite its otherwise fine performance.

Another surprise for me is that ChatGPT comes in third.

That’s not an issue with Perplexity.

The numbered lists were very clear, and the citations were almost too extensive.

I did not expect Claude to be at the top of this list.

That sense vanished during this test.

AI assistants are tools, not contestants in a reality show where only one can win.

Different tasks call for different capabilities.