
High-bandwidth memory is essential for AI and cloud computing, offering high-speed data transfer and efficiency for demanding workloads.

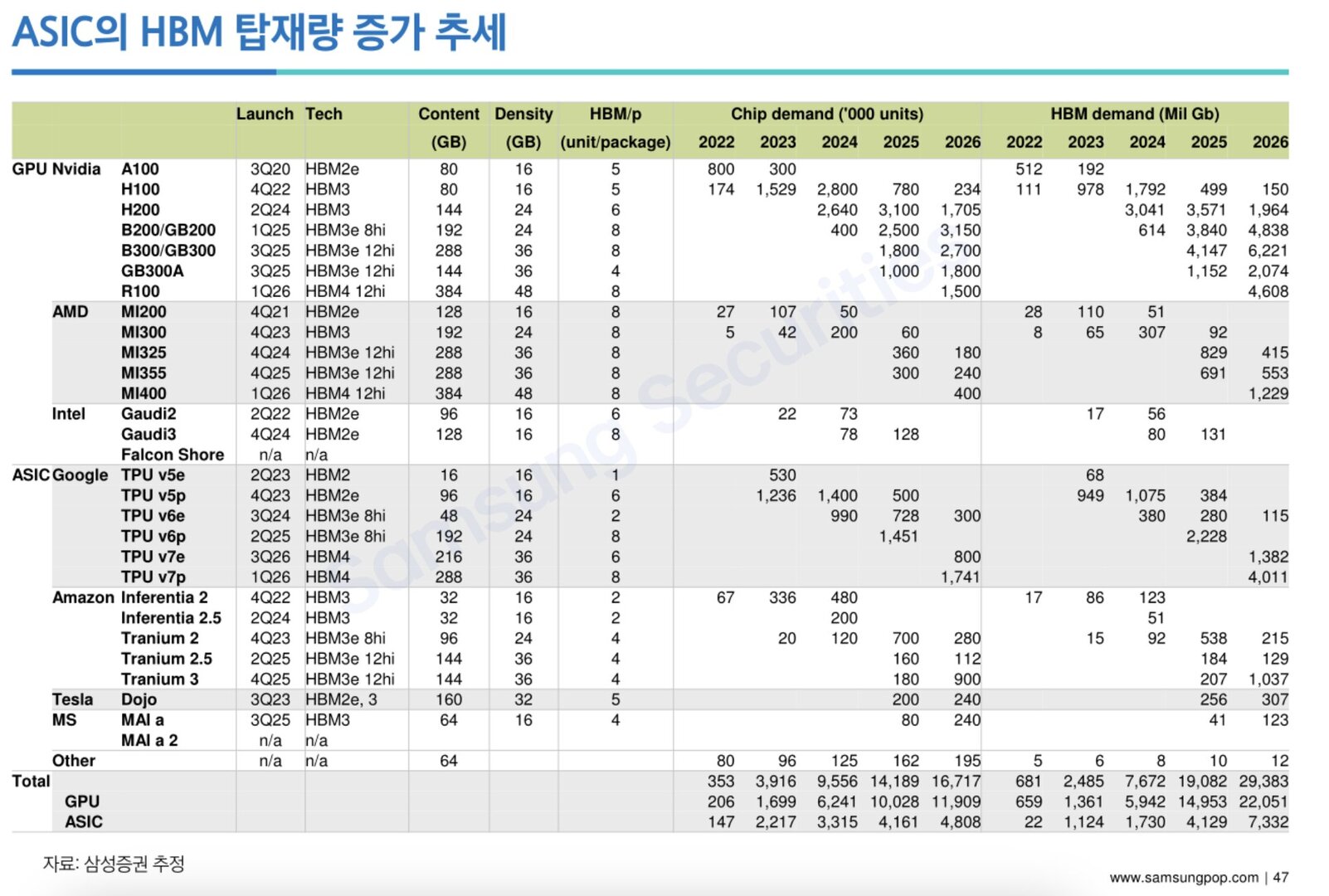

The B200 and GB200, using 192GB of HBM3e (8hi), will require 3.15 million units.

The R100, expected to sport 384GB of HBM4 (12hi), will need 1.5 million units.

TPU v7p alone is expected to require 1.7 million units in 2026.

Trainium 3 alone is expected to require 900,000 units that year.

AMD is largely seen as Nvidia's closest rival, but it trails far, far behind.

The company's total HBM demand is projected to reach 820,000 chips in 2026.

Intel is also struggling to compete.

Of course, these numbers only cover HBM supplied by Samsung.