What fascinated me is that my friend has no illusions about generative AI’s accuracy.

The next thing he said surprised me not because it isn’t true, but because he acknowledged it.

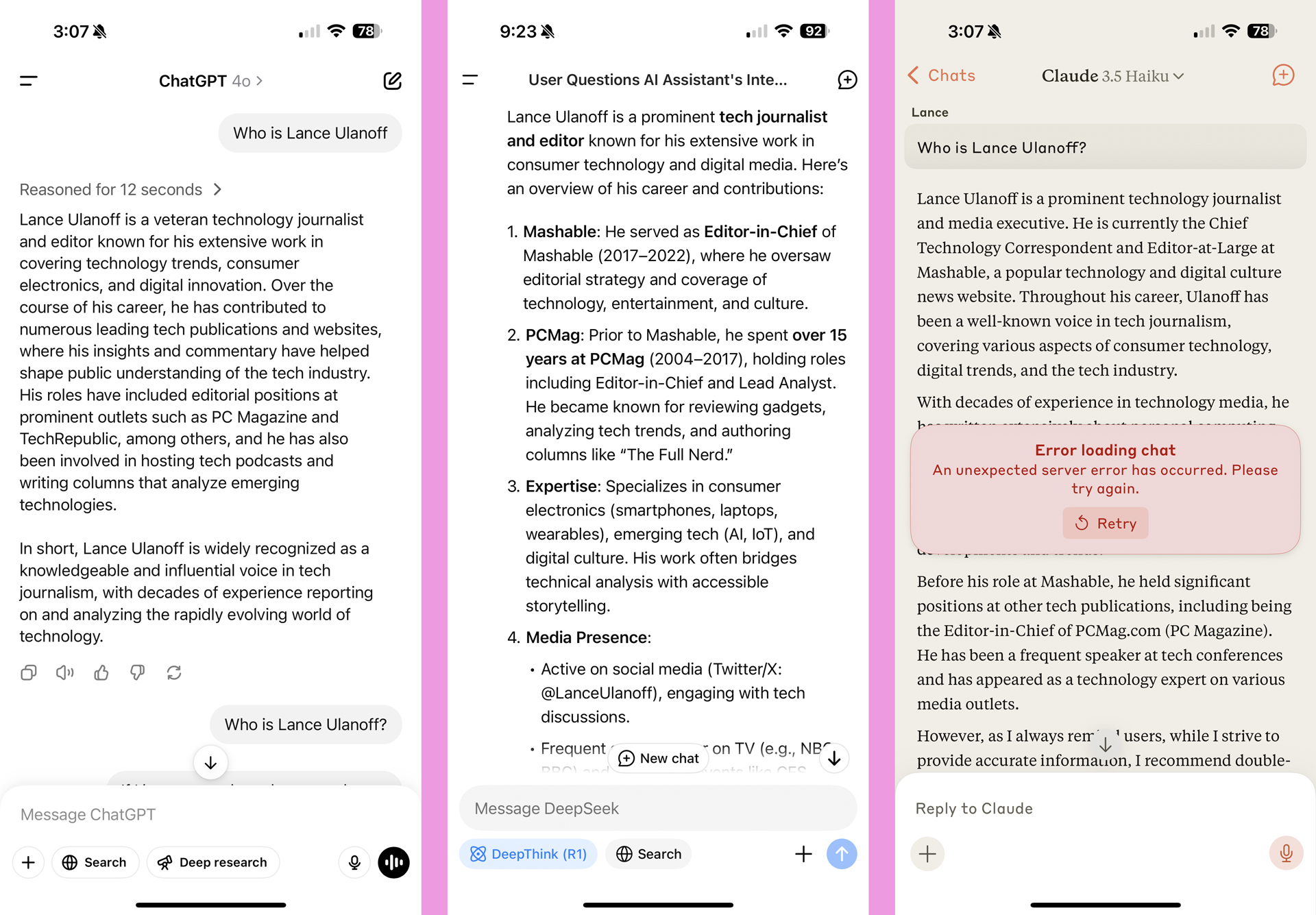

ChatGPT had me working at a place I’ve never worked (left). DeepSeek couldn’t get the dates right (center), and Claude AI (right) also had timeline issues.

Eventually, AI won’t hallucinate; it won’t make mistakes.

That’s the trajectory and we should prepare for it.

The future is perfect

I agreed with him because this has long been my thinking.

The speed of development essentially guarantees it.

These were minor errors and not of any real importance because who cares about my background except me?

That’s close to “TechRadar,” but no cigar.

DeepSeek, the Chinese AI chatbot wunderkind, had me working at Mashable years after I left.

It also confused my PCMag history.

Google Gemini smartly kept the details scant, but it got all of them right.

ChatGPT’s 4o model took a similar pared-down approach and achieved 100% accuracy.

Claude AI lost the thread of my timeline and still had me working at Mashable.

However, research is showing that models are not only getting larger, they’re getting smarter, too.

A year ago, one study found ChatGPT hallucinating 40% of the time in some tests.

With older models like Meta Llama 3.2, you head back into double-digit hallucination rates.

Hallucination-driven errors are likely creeping into all sectors of home life and industry and infecting our systems with misinformation.

They may not be big errors, but they will accumulate.

After all, why should we have to clean up AI’s messes?